Imagine your engineering organisation wakes up tomorrow with 100x its current execution capacity.

Not more people. More output.

Backlog items that would have taken quarters can be built in weeks. Entire workflow variations can be assembled and deployed without negotiating headcount. Experiments that once required roadmap trade-offs no longer do.

What breaks first?

Most executives would instinctively point to budget, infrastructure limits, or operational overhead.

They would debate tooling.

That is not where the system breaks.

It breaks in how decisions are made.

For decades, engineering strategy was shaped by scarcity. Build capacity was limited. Integration was painful. Reversing direction was expensive. That reality forced discipline.

That constraint shaped our instincts.

We learned to debate before building. To model ROI before shipping. To protect shared codebases because they were expensive to evolve.

That logic worked in scarcity. In abundance, it slows learning.

When generation becomes cheap, production capacity stops being scarce. Governance becomes the constraint on capital. The question shifts from “Can we build this?” to “Are we allowed to run this?”

That shift is not procedural. It changes how capital moves inside the company.

The Compounding Advantage of Faster Signal

When the cost of building drops, the cost of being wrong changes.

If experiments can be launched and deleted quickly, signal arrives earlier. Earlier signal means capital moves sooner. It doesn’t wait for the next planning cycle to admit it was wrong.

Over time, that compounds. Organisations that shorten the distance between assumption and proof reallocate faster. Those that continue to gate decisions through long planning cycles will look disciplined, but they will learn more slowly. In markets where competitors can generate and validate evidence faster, slower organisations will appear well managed right up until they fall behind. The gap will not be visible in velocity. It will be visible in decisions.

The engineering model determines whether you compound or stall.

Figure 1: The Compounding Advantage. Abundance leads to faster signal, enabling smarter capital allocation and compounding growth.


Where It Breaks

If governance, contracts, and lifecycle tooling do not scale with capacity, coordination will erase the gains. In a high-velocity environment, operational overhead will compound faster than output, and usable velocity will stall even as activity increases.

Without reversibility, abundance becomes drag as operational overhead increases.

The Unit of Experimentation Changes

In a scarcity model, experimentation happens inside a shared codebase.

One main branch. Feature branches. Flags controlling exposure. A protected asset that must converge back to one reality.

That works when experimentation is expensive.

In abundance, the unit of experimentation shifts.

It is no longer the code path. It is the deployable artifact.

An experiment becomes a full vertical slice: logic, workflow, sometimes UI, deployed independently behind a stable contract. When the experiment ends, the artifact is deleted. Not toggled. Not half-retired. Removed.

Reversibility becomes a first-class operation.

If you cannot delete a production artifact without destabilising the business, you are still operating in managed scarcity. I have watched features survive for a year longer than they should have because unwinding the integration felt riskier than living with the drag. Most organisations reward shipping. Very few reward removal.

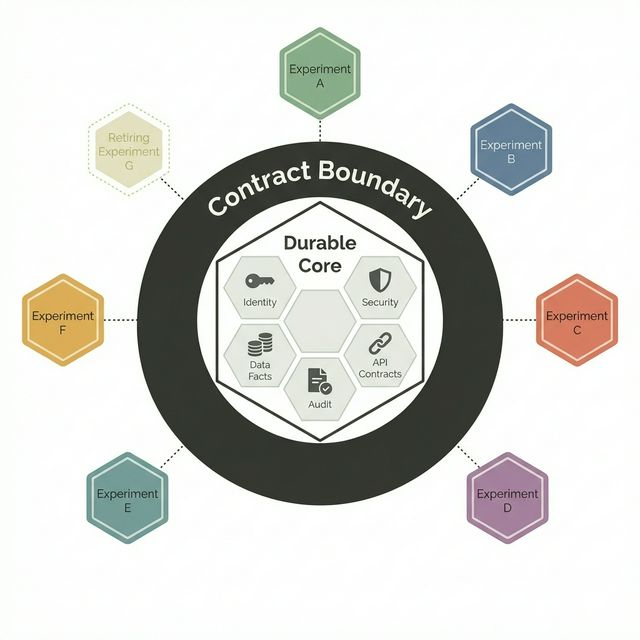

Architecture: Invariants at the Center, Change at the Edge

There is one runtime core.

The core exists to protect invariants:

- Canonical data facts

- Identity and permission boundaries

- Security invariants

- Audit trails

- API and event contracts

The core evolves slowly and deliberately. It is versioned over time, but only one version is live. Backward compatibility is a discipline, not an afterthought.

Experiments sit at the edge. They depend on the core’s contracts but do not redefine them.

The strategic boundary is no longer the main branch. It is the contract boundary.

In abundance, contracts encode governance.
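One way to picture a contract that encodes governance is a compatibility check that runs before a new version of a core contract goes live. The sketch below is illustrative only. Representing a contract as a mapping of field names to type names, the `breaking_changes` function, and the `order_v*` examples are all assumptions made for the example, not a prescribed implementation.

```python
from typing import Dict, List

# Hypothetical representation: a contract is a mapping of field name -> type name.
Contract = Dict[str, str]


def breaking_changes(old: Contract, new: Contract) -> List[str]:
    """List the ways `new` would break consumers that were built against `old`."""
    problems: List[str] = []
    for field, type_name in old.items():
        if field not in new:
            problems.append(f"removed field: {field}")
        elif new[field] != type_name:
            problems.append(f"type changed: {field} ({type_name} -> {new[field]})")
    # In this simplified model, fields that exist only in `new` are treated as
    # additive and therefore safe for existing consumers.
    return problems


# Hypothetical contract versions, for illustration only.
order_v1 = {"order_id": "string", "total_cents": "int"}
order_v2 = {"order_id": "string", "total_cents": "int", "currency": "string"}
order_v3 = {"order_id": "string", "total": "float"}

additive = breaking_changes(order_v1, order_v2)  # nothing removed or retyped
breaking = breaking_changes(order_v1, order_v3)  # drops a field consumers rely on
```

A check like this can block the release of `order_v3` automatically, so the decision comes from the contract rather than from a review meeting.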

Data: Facts Are Durable, Interpretations Are Disposable

Scarcity assumes one linear schema evolution. Migrate everything forward together. One projection of reality at a time.

In abundance, that model becomes fragile.

Facts must remain durable. Immutable records of what happened.

Interpretation can vary.

Experiments derive their own projections, read models, or workflow-specific views from the same underlying facts. When an experiment dies, its interpretation disappears. The facts remain.
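The split between durable facts and disposable interpretations can be sketched with an append-only log and a projection that an experiment owns. This is a minimal sketch under stated assumptions: the `Fact` and `FactLog` types, the `spend_by_customer` projection, and the experiment name are hypothetical and exist only to show the shape of the idea.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class Fact:
    """An immutable record of something that happened (hypothetical shape)."""
    customer_id: str
    kind: str
    amount: int


class FactLog:
    """Append-only store owned by the core: facts are added, never edited or removed."""

    def __init__(self) -> None:
        self._facts: List[Fact] = []

    def append(self, fact: Fact) -> None:
        self._facts.append(fact)

    def read(self) -> Tuple[Fact, ...]:
        # Return a tuple so readers cannot mutate the underlying log.
        return tuple(self._facts)


def spend_by_customer(facts: Tuple[Fact, ...]) -> Dict[str, int]:
    """One experiment's interpretation: total purchases per customer."""
    totals: Dict[str, int] = {}
    for fact in facts:
        if fact.kind == "purchase":
            totals[fact.customer_id] = totals.get(fact.customer_id, 0) + fact.amount
    return totals


log = FactLog()
log.append(Fact("c1", "purchase", 40))
log.append(Fact("c2", "purchase", 15))
log.append(Fact("c1", "refund", 10))
log.append(Fact("c1", "purchase", 5))

# Each experiment registers its own projection over the shared facts.
projections: Dict[str, Callable[[Tuple[Fact, ...]], Dict[str, int]]] = {
    "exp-spend-view": spend_by_customer,
}
view = projections["exp-spend-view"](log.read())

# Ending the experiment deletes the interpretation; the facts are untouched.
del projections["exp-spend-view"]
```

The projection can be rebuilt or replaced at any time because it holds no state of its own. Everything it knows is derived from the log.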

Schema drift changes structure. Semantic drift changes meaning, and meaning is what learning depends on.

In abundance, noise scales faster than signal unless meaning is enforced.

Abundance demands stricter semantic discipline than scarcity ever did.

Production Design: Durable and Modular Core, Disposable Experiments

Microservices were a rational response to coordination challenges in scarcity. They optimise reuse and independent deployment of long-lived components.

In an abundance model, the core may remain modular, even microservice-heavy if needed. That is where durability belongs.

But experiments should minimise operational surface area.

A supposedly disposable experiment artifact that spawns five services, three data stores, and its own internal routing layer is not abundance. It is overhead.

By default, experiments should bias toward modular monoliths: one deployable, internally structured, easy to observe, easy to delete.

Without strong contract enforcement and automated lifecycle tooling, parallel artifacts create entropy faster than they create learning.
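Automated lifecycle tooling can be as simple in principle as a registry where every experiment artifact carries an owner and an expiry, and a sweep removes whatever has expired. The sketch below assumes a hypothetical in-memory `Registry`. A real system would also tear down the deployment, its routes, and its data, none of which is modelled here.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Dict, List


@dataclass(frozen=True)
class Artifact:
    name: str
    owner: str
    expires_at: datetime


class Registry:
    """Hypothetical registry: no artifact exists without an owner and an expiry."""

    def __init__(self) -> None:
        self._artifacts: Dict[str, Artifact] = {}

    def register(self, name: str, owner: str, ttl: timedelta, now: datetime) -> None:
        self._artifacts[name] = Artifact(name, owner, now + ttl)

    def sweep(self, now: datetime) -> List[str]:
        """Delete every expired artifact and return the names that were removed."""
        expired = sorted(
            name for name, artifact in self._artifacts.items()
            if artifact.expires_at <= now
        )
        for name in expired:
            del self._artifacts[name]
        return expired

    def live(self) -> List[str]:
        return sorted(self._artifacts)


start = datetime(2025, 1, 1, tzinfo=timezone.utc)
registry = Registry()
registry.register("exp-checkout-b", "payments-team", timedelta(days=14), start)
registry.register("exp-onboarding-c", "growth-team", timedelta(days=60), start)

# Thirty days later the short-lived experiment is removed without a meeting.
removed = registry.sweep(start + timedelta(days=30))
```

Making expiry the default means keeping an experiment alive is the action that needs a decision, not removing it.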

Abundance reduces build cost, but it increases operational complexity. Strategy should counterbalance that, not amplify it.

Figure 2: One Durable Core, Disposable Experiments. The core remains stable while experiments at the edge are spun up and retired rapidly.


Routing: N Variants, Clean Attribution

Blue-green deploys assume two known states. Abundance allows many.

Multiple artifacts can run simultaneously, each serving a defined cohort. Routing becomes experiment-aware, not simply version-aware.

But parallelism without attribution is chaos.

Every request, every event, every metric must be cohort-tagged. Variant identity must be visible internally. Support must be able to answer “what is this customer seeing?” in one click.
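Cohort assignment and tagging can be sketched with a deterministic hash, so that the same customer always lands in the same variant and every event carries that identity. This is an illustration under assumptions: `Variant`, `assign_variant`, `tag_event`, and the experiment and variant names are hypothetical, and weighted hashing is only one of several possible assignment schemes.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Variant:
    name: str
    weight: int  # relative share of traffic; weights must sum to more than zero


def assign_variant(customer_id: str, experiment: str, variants: List[Variant]) -> str:
    """Deterministically map a customer to one variant of an experiment."""
    total = sum(v.weight for v in variants)
    digest = hashlib.sha256(f"{experiment}:{customer_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % total
    for variant in variants:
        if bucket < variant.weight:
            return variant.name
        bucket -= variant.weight
    raise AssertionError("unreachable: weights cover every bucket")


def tag_event(event: Dict[str, str], customer_id: str, experiment: str,
              variants: List[Variant]) -> Dict[str, str]:
    """Return a copy of the event with experiment and variant attached."""
    return {
        **event,
        "experiment": experiment,
        "variant": assign_variant(customer_id, experiment, variants),
    }


variants = [Variant("control", 50), Variant("exp-checkout-b", 50)]

# Support lookup: the same inputs always give the same answer.
seen_by_customer = assign_variant("cust-42", "checkout", variants)
event = tag_event({"type": "page_view"}, "cust-42", "checkout", variants)
```

Because assignment depends only on the experiment name and the customer identifier, support tooling can recompute "what is this customer seeing?" without a stored lookup table.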

If attribution is muddy, experimentation collapses into debate.

Abundance without clean signal is noise at scale.

Support: Two Phases

Abundance changes the cost structure of ownership.

During the experiment:

- The product owner owns supportability.

- Clear behavioural boundaries and rollback paths are defined upfront.

- Variant identity is visible in the UI.

- AI-assisted tooling handles triage, telemetry lookup, and reproduction.

This keeps experimentation cheap without creating internal confusion.

At promotion, the ownership model changes:

- Full documentation and training become mandatory.

- Support ownership formally transfers.

- SLAs and enablement standards apply.

- The feature becomes part of the stable narrative.

If experiments require full production-grade enablement upfront, experimentation will be capped by coordination, not engineering capacity.

Platform Becomes Strategy

When capacity was scarce, product and engineering coordinated through process.

In abundance, coordination happens through platform.

Platform engineering becomes the steward of the slow path:

- Contract enforcement

- Policy-as-code gates

- Cohort routing infrastructure

- Artifact lifecycle tooling

- Telemetry standards

- Kill switches

Many organisations still treat platform as infrastructure overhead rather than strategic leverage. In an abundance model, that assumption becomes expensive. If the platform is weak, experimentation slows. If the platform is strong, governance scales.

Its mandate is not to slow change. It is to make change cheap without increasing systemic risk.
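A policy-as-code gate makes that mandate concrete: an experiment that satisfies the invariants deploys without a meeting, and one that does not is rejected with reasons. The sketch below is a hypothetical example. The manifest keys, the `gate` function, and the contract names in `PUBLISHED_CONTRACTS` are assumptions chosen to mirror the responsibilities listed above.

```python
from typing import Dict, List

# Hypothetical set of contracts the core currently publishes.
PUBLISHED_CONTRACTS = {"orders.v1", "identity.v2"}

# Hypothetical manifest keys every experiment must declare before deployment.
REQUIRED_KEYS = ("owner", "cohort", "expires_at", "kill_switch")


def gate(manifest: Dict[str, object]) -> List[str]:
    """Return the policy violations for an experiment manifest (empty means allowed)."""
    violations: List[str] = []
    for key in REQUIRED_KEYS:
        if not manifest.get(key):
            violations.append(f"missing {key}")
    declared = manifest.get("depends_on") or []
    for name in sorted(set(declared) - PUBLISHED_CONTRACTS):
        violations.append(f"depends on unpublished contract: {name}")
    return violations


allowed = gate({
    "owner": "payments-team",
    "cohort": "checkout-b",
    "expires_at": "2025-03-01",
    "kill_switch": "flags/exp-checkout-b",
    "depends_on": ["orders.v1"],
})

rejected = gate({
    "owner": "growth-team",
    "cohort": "onboarding-c",
    "depends_on": ["orders.v1", "billing.internal"],
})
```

The gate returns reasons rather than a bare yes or no, so a rejected experiment can be fixed and resubmitted without escalation.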

The fast path belongs to product engineers running experiments.

The slow path belongs to platform, protecting invariants.

That is not an org chart tweak. It is a strategic shift in where power sits.

The Discipline of Abundance

Abundance makes code generation cheap. It makes coordination expensive.

Deletion must be safe. Attribution must be clean. Contracts must be enforced. When any of those weakens, parallelism stops being an advantage and becomes operational drag.

If engineers could spin up fifty production-ready artifacts this quarter, what would stop them?

If the answer is meetings rather than invariants, the architecture has not caught up to the economics.

Abundance is not about building everything.

It is about designing a system where most of what gets built is allowed to be wrong, quickly, without destabilising the core.

That is engineering strategy in the age of abundance.